For years, activists have been worried that facial recognition software could end up putting the wrong people in jail.

In October 2018, someone stole nearly $4,000 in watches from a Detroit jewelry store. A Detroit man with no criminal record was later wrongly arrested for the theft on his front lawn, in front of his wife and children.

Why?

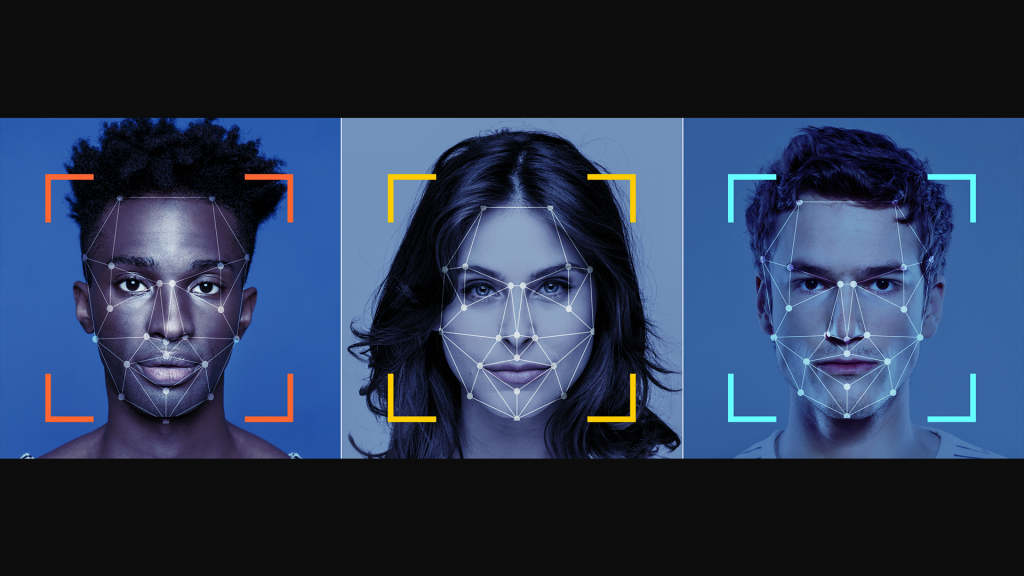

Because of an algorithm. Police fed one blurry image through a facial recognition system, and it suggested a likely match with the accused’s driver’s license photo. The man was later released and the charges were dropped, but the incident raises the question: is the software putting Black Americans at risk?

A few things to note:

1. Facial recognition is NOT probable cause to arrest.

2. Certain images should never be submitted for search.

3. Warrant standards must meet normal legal requirements.

Does the technology amplify bias rather than fight it?

Researchers have concluded that facial recognition algorithms misidentify Black faces more often than white faces. So perhaps the use of facial recognition software should be limited to investigations of violent crimes and home invasions. And any match should certainly require that more than one experienced examiner review and agree with the results.

Cities like San Francisco and Boston have banned police use of facial recognition software. The stakes of being under police scrutiny are so high that it’s just not clear anyone can create a system that is fair and unbiased.

Fierce Debate

Bottom line: there should never be a rush to judgment on guilt or innocence when using facial recognition software. An arrest based on a misidentification is humiliating. It can lead to a permanent arrest record, failed background checks, and much worse.

And there seems to be fierce debate among the people who study facial recognition over whether the technology can somehow be made reliable enough that bias won’t play into it, or whether facial recognition, by its nature, will always reflect the unfair treatment of Black Americans.